An Introduction to Probability and Random Variables for Pattern Recognition 1

Axioms

The theory of probability forms the backbone of learning and research in statistical pattern recognition. Probability theory is one of the most amazing fields of mathematics, and it is built on just three simple axioms.

- The first axiom is that the probability of an event $A$, written $P(A)$, must be greater than or equal to zero: $P(A) \ge 0$.

- Next, the probability of $A$ or $B$, $P(A \cup B)$, is the probability of $A$ plus the probability of $B$ if $A$ and $B$ are mutually exclusive, which means $A$ and $B$ cannot happen together: $P(A \cup B) = P(A) + P(B)$.

- Finally, the probability of the entire sample space $\Omega$ is equal to $1$: $P(\Omega) = 1$.

From these three axioms, we see that probability theory is an elegant, self-checking mathematics: no event has a probability less than $0$, and the probabilities over the whole sample space must sum to $1$. Also, observe that the only arithmetic operator involved is the $+$ (plus) sign. However, when we begin to recombine and use these axioms, all the other arithmetic operators begin to emerge.
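For instance, subtraction appears as soon as we apply the second and third axioms to an event $A$ and its complement $A^c$ (every outcome in the sample space $\Omega$ that is not in $A$). The two events are mutually exclusive and together make up all of $\Omega$, so:

$$
P(A) + P(A^c) = P(A \cup A^c) = P(\Omega) = 1
\quad\Longrightarrow\quad
P(A^c) = 1 - P(A).
$$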

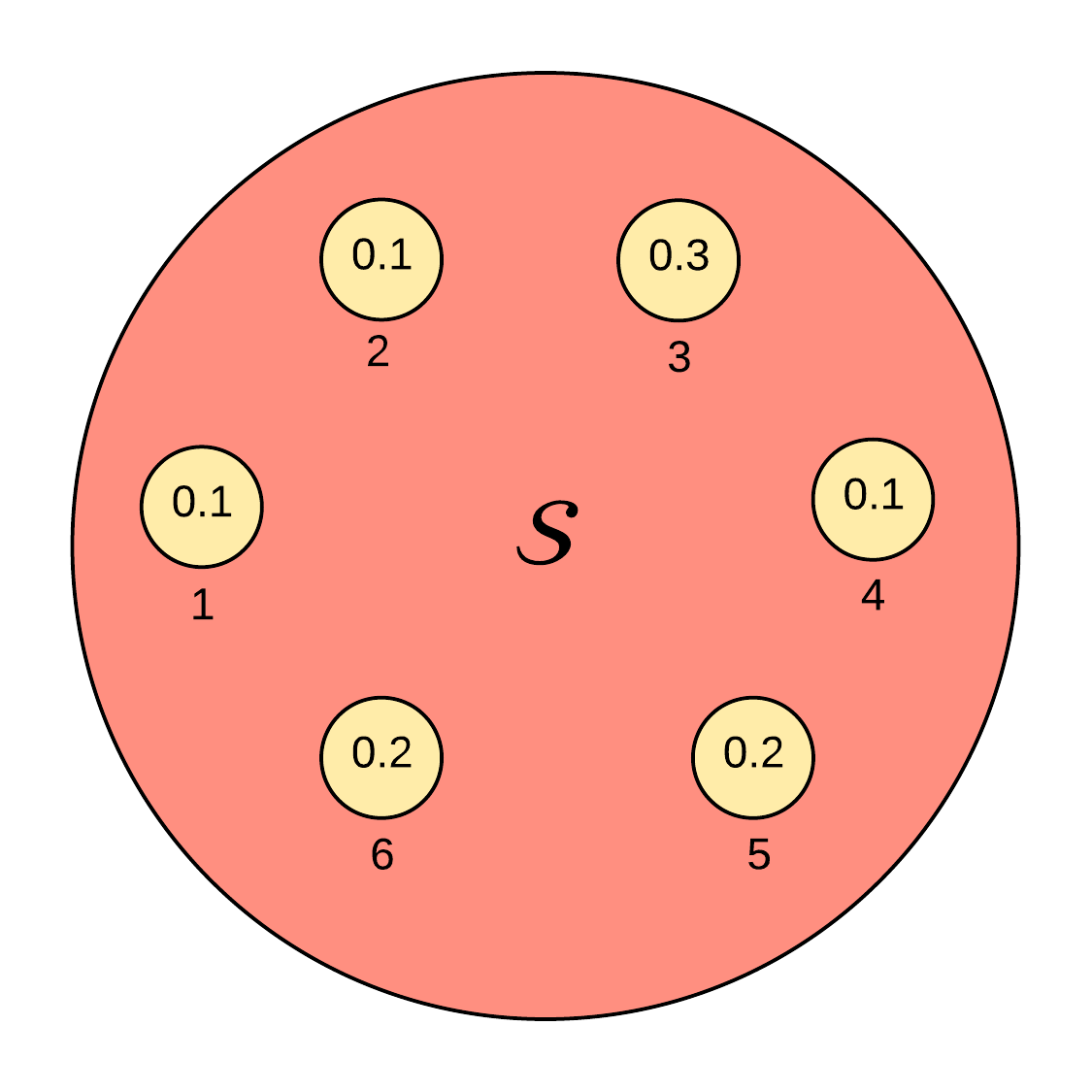

Suppose we have a sample space with the probabilities shown in Figure 1.

Let us check if the above probability sample space is valid:

- Are all event probabilities greater than or equal to zero? Yes.

- Do the probabilities over the whole sample space sum to $1$? Yes.

- If $A$ and $B$ are mutually exclusive, is their combined probability $P(A \cup B)$ the sum of their individual probabilities, $P(A) + P(B)$? Yes.
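The same checklist can be written as a small function. This is a minimal sketch, assuming a finite sample space stored as a `{outcome: probability}` dictionary. The names `is_valid_distribution` and `prob_of_event` are hypothetical helpers, and the fair die is a stand-in because the values from Figure 1 are not reproduced in the text.

```python
from fractions import Fraction
from math import isclose


def is_valid_distribution(probs, tol=1e-9):
    """Check the axioms for a finite sample space given as {outcome: probability}."""
    # Axiom 1: no outcome may have a negative probability.
    if any(p < 0 for p in probs.values()):
        return False
    # Axiom 3: the whole sample space must have probability 1.
    return isclose(sum(probs.values()), 1, abs_tol=tol)


def prob_of_event(probs, event):
    """P(event) for an event given as a set of outcomes.

    Distinct outcomes are mutually exclusive, so Axiom 2 lets us add them.
    """
    return sum(probs[outcome] for outcome in event)


# Hypothetical fair die standing in for Figure 1 (its values are not given here).
die = {face: Fraction(1, 6) for face in range(1, 7)}
print(is_valid_distribution(die))     # True
print(prob_of_event(die, {1, 3, 5}))  # 1/2
```

Axiom 2 can be spot-checked the same way: `prob_of_event(die, {1, 2})` equals `prob_of_event(die, {1}) + prob_of_event(die, {2})`. Using `Fraction` keeps the arithmetic exact, so the sums are not blurred by floating-point rounding.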

Dependence: Information Given

The probability of event $A$ given that event $B$ has already occurred is written as $P(A \mid B)$, where the symbol “$\mid$” means “given”. $P(A \mid B)$ is the probability of $A$ and $B$ both occurring, $P(A \cap B)$, divided by the probability of event $B$, $P(B)$. Formally, this is defined as:

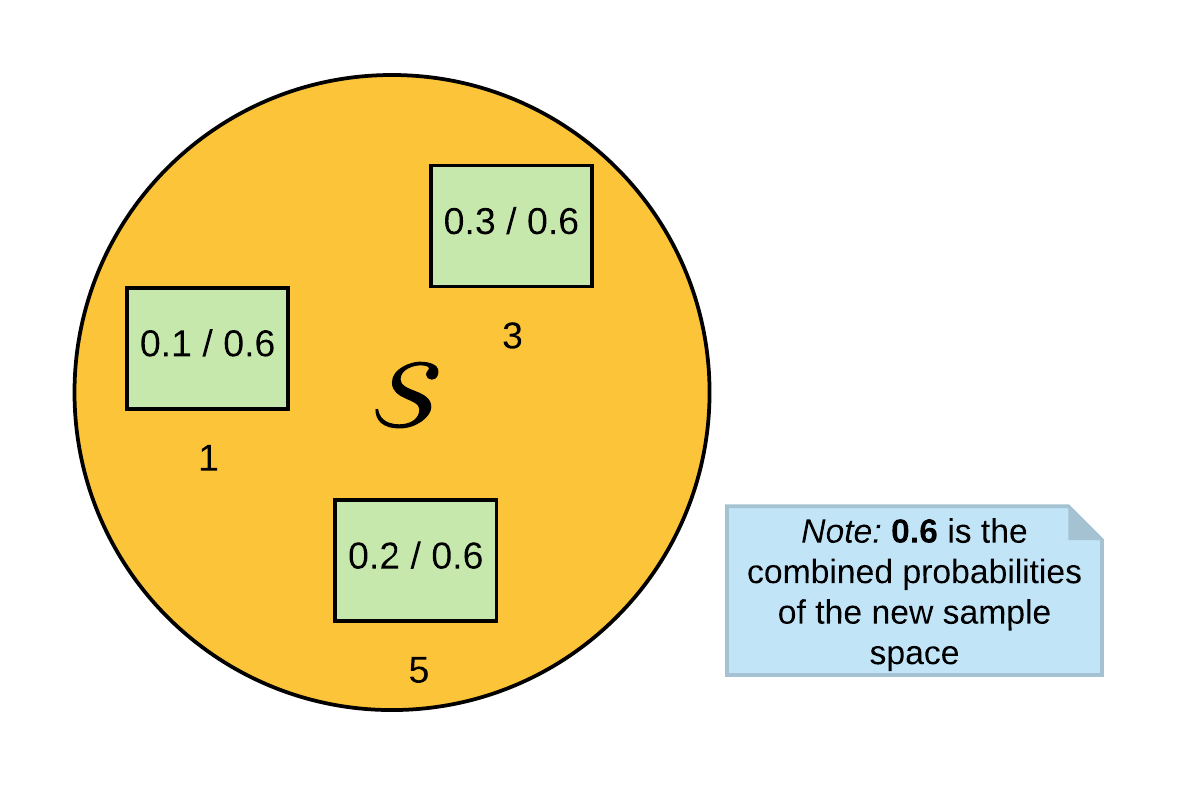

This formula is quite intuitive. Suppose we want to find the probability of a particular outcome $x$ given that the outcome is odd, $P(x \mid \text{odd})$. If we keep only the odd outcomes from the probability space (as shown in Figure 2), their probabilities no longer sum to $1$, so they no longer form a valid probability space. We therefore normalize them by dividing each odd outcome's probability by $P(\text{odd})$, the sum of the probabilities of all the odd outcomes. In the normalized space, we can read off the probability of obtaining $x$ given that the outcome is odd. This is the dependence property of probabilities.

Solving for $P(x \mid \text{odd})$, the result can now be read directly from the normalized sample space.
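The normalization step can be sketched in a few lines. This assumes the same `{outcome: probability}` representation. The loaded die values are hypothetical and are not the numbers from Figures 1 and 2, and `condition_on` is an illustrative helper name.

```python
from fractions import Fraction


def condition_on(probs, event):
    """Return P(outcome | event) for every outcome in `event`.

    Keep only the outcomes in `event`, then divide each by P(event) so the
    restricted space sums to 1 again.
    """
    p_event = sum(probs[o] for o in event)
    if p_event == 0:
        raise ValueError("cannot condition on an event with probability 0")
    return {o: probs[o] / p_event for o in event}


# Hypothetical loaded die (not the values from the figures).
loaded_die = {1: Fraction(1, 10), 2: Fraction(1, 10), 3: Fraction(2, 10),
              4: Fraction(2, 10), 5: Fraction(1, 10), 6: Fraction(3, 10)}
given_odd = condition_on(loaded_die, {1, 3, 5})
print(given_odd[3])  # 1/2
```

Here $P(\text{odd}) = 0.1 + 0.2 + 0.1 = 0.4$, so $P(3 \mid \text{odd}) = 0.2 / 0.4 = 1/2$. This matches $P(A \cap B) / P(B)$ with $A = \{3\}$ and $B = \{1, 3, 5\}$.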

Dependent probabilities are formally written as:

$$P(A \cap B) = P(A \mid B)\,P(B)$$
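This form follows directly from the definition of conditional probability. Multiplying both sides by $P(B)$, which is valid whenever $P(B) > 0$, gives:

$$
P(A \mid B) = \frac{P(A \cap B)}{P(B)}
\quad\Longrightarrow\quad
P(A \cap B) = P(A \mid B)\,P(B).
$$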

Independence

Two events $A$ and $B$ are independent if knowing whether $B$ occurred gives no information about the probability of $A$, $P(A)$. Suppose we are asked for the probability of event $A$ occurring given that it snowed in Lagos, i.e., $P(A \mid \text{snow in Lagos})$. This expression simply equals $P(A)$, because whether or not it snowed in Lagos is irrelevant to the probability of $A$. This example illustrates the independence property of probabilities. Probability independence is formally expressed as:

$$P(A \mid B) = P(A)$$
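The Lagos example can be checked numerically. This is a minimal sketch under stated assumptions: the event $A$ is taken to be "a fair die shows 6", the snow probability `p_snow` is an arbitrary illustrative value, and independence is built in by making each joint probability the product of the two marginals. The helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Hypothetical joint model: a fair die roll paired with whether it snowed in
# Lagos. Independence is assumed by building each joint probability as the
# product of the two marginals; p_snow is an arbitrary illustrative value.
p_snow = Fraction(1, 1000)
die = {face: Fraction(1, 6) for face in range(1, 7)}
weather = {"snow": p_snow, "no snow": 1 - p_snow}
joint = {(face, w): die[face] * weather[w] for face, w in product(die, weather)}


def prob(event):
    """P(event), where event is a predicate over (face, weather) outcomes."""
    return sum(p for outcome, p in joint.items() if event(outcome))


def cond_prob(a, b):
    """P(a | b) = P(a and b) / P(b)."""
    return prob(lambda o: a(o) and b(o)) / prob(b)


def rolled_six(outcome):
    return outcome[0] == 6


def snowed(outcome):
    return outcome[1] == "snow"


print(prob(rolled_six))               # 1/6
print(cond_prob(rolled_six, snowed))  # 1/6: knowing it snowed changes nothing
```

Conditioning on snow multiplies the numerator and the denominator by the same factor, $P(\text{snow})$, so $P(A \mid B) = P(A)$ exactly.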

Total Probability

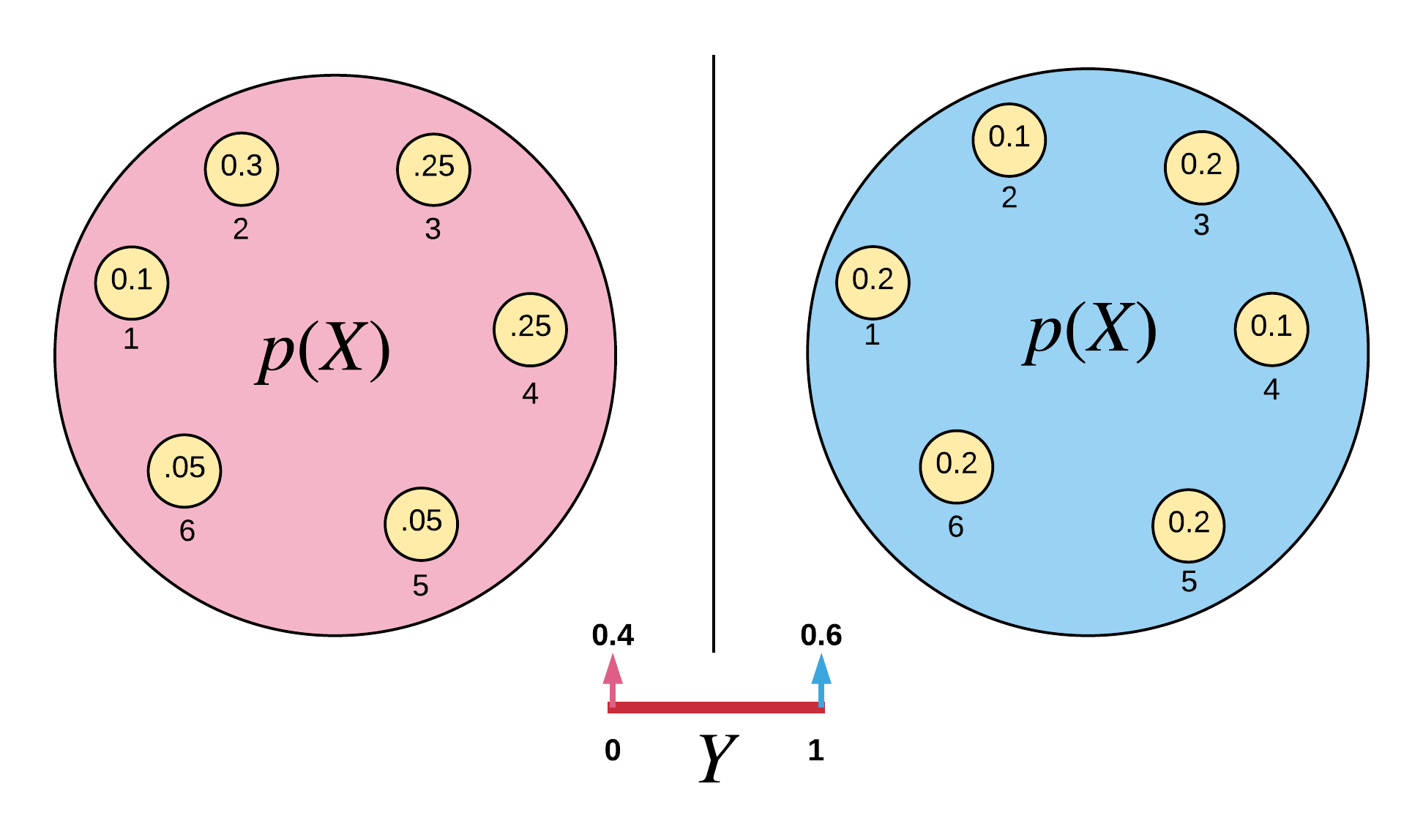

Suppose that event $A$ can be drawn from two different distributions. We are also given a random variable $B$ that has only two outcomes, which we write as $B = 0$ and $B = 1$. When $B = 0$, we draw from the first distribution (left of Figure 3), and when $B = 1$, we draw from the other distribution (right of Figure 3).

Suppose we are asked:

- What is the probability of $A$ given $B = 0$, $P(A \mid B = 0)$? We obtain 0.25 by examining the distribution of $A$ when $B = 0$.

- What is the probability of $A$ given $B = 1$, $P(A \mid B = 1)$? We obtain 0.1 by examining the distribution of $A$ when $B = 1$.

Now suppose we are asked for the probability of $A$ itself, $P(A)$. The answer is not obvious from the probability space because we are not told the outcome of $B$. However, we can derive the total probability of $A$ by accounting for both $B = 0$ and $B = 1$. To do this, recall the dependence formula, $P(A \cap B) = P(A \mid B)\,P(B)$.
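Summing the dependence formula over both outcomes of $B$ gives $P(A) = P(A \mid B = 0)\,P(B = 0) + P(A \mid B = 1)\,P(B = 1)$. The sketch below computes this sum. The conditional values 0.25 and 0.1 come from the questions above. The text does not give $P(B)$, so the 50/50 prior is a hypothetical assumption, and `total_probability` is an illustrative helper name.

```python
from math import isclose


def total_probability(cond_probs, prior):
    """P(A) = sum over b of P(A | B = b) * P(B = b)."""
    # The prior over B must itself be a valid distribution (axioms 1 and 3).
    if any(p < 0 for p in prior.values()) or not isclose(sum(prior.values()), 1.0):
        raise ValueError("prior over B must be non-negative and sum to 1")
    return sum(cond_probs[b] * prior[b] for b in prior)


# P(A | B = 0) = 0.25 and P(A | B = 1) = 0.1, as read off Figure 3.
cond = {0: 0.25, 1: 0.1}
# Hypothetical: P(B) is not given in the text, so assume B is equally likely
# to be 0 or 1.
prior = {0: 0.5, 1: 0.5}
p_a = total_probability(cond, prior)
print(p_a)
```

With the assumed prior, the formula gives $0.5 \times 0.25 + 0.5 \times 0.1 = 0.175$, up to floating-point rounding. A different $P(B)$ moves the answer anywhere between 0.1 and 0.25.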