The Exploration-Exploitation Trade-off

The ideas of exploration and exploitation are central to designing an expedient reinforcement learning system. The word “expedient” is a terminology adapted from the theory of Learning Automata to mean a system in which the Agent (or Automaton) learns the dynamics of the stochastic Environment. In other words, the Agent learns a policy for making actions in a random Environment that is better than pure chance.

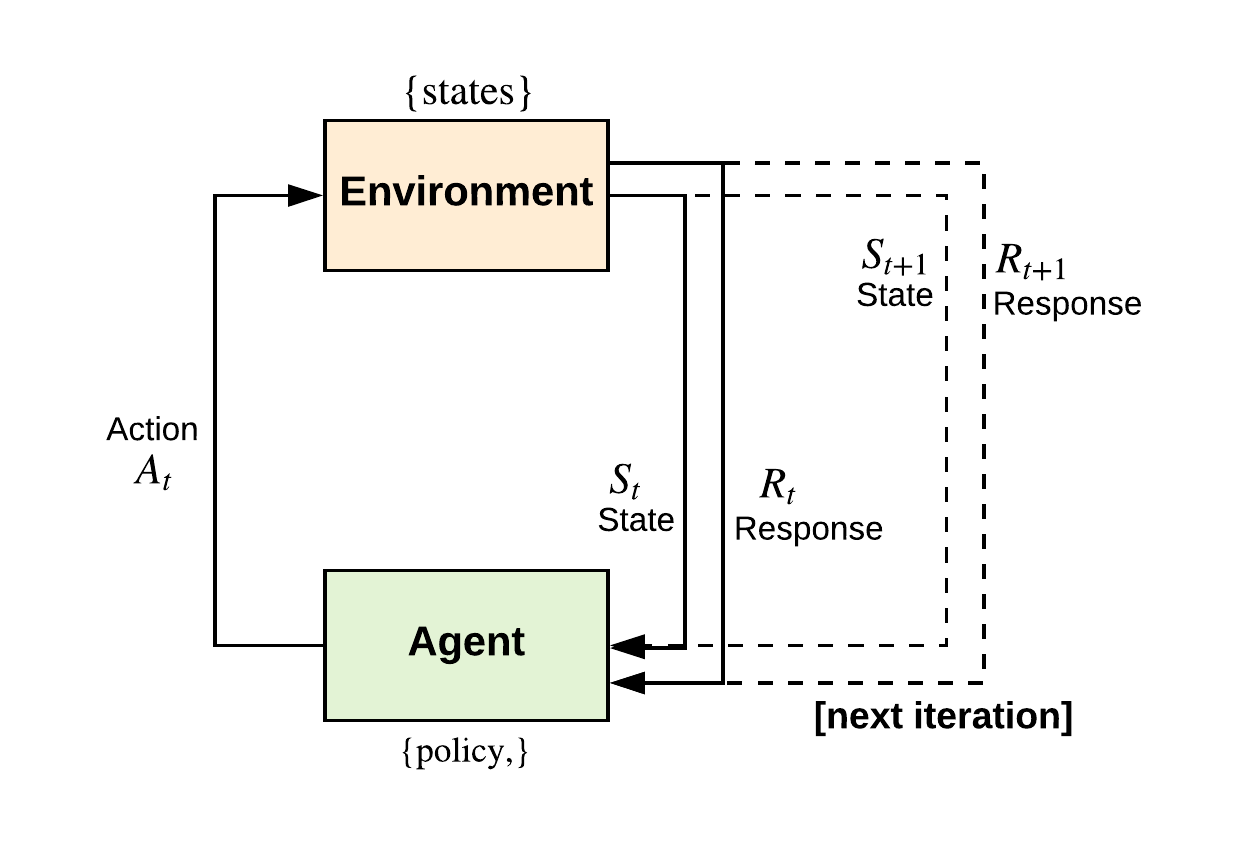

In training an Agent to learn in a random Environment, the challenges of exploration and exploitation immediately arise. An Agent receives rewards as it interacts with an Environment in a feedback framework. In order to maximize its rewards, it is typical for the Agent to repeat actions that it tried in the past that produced “favourable” rewards. However, in order to find these actions leading to rewards, the Agent will have to sample from a set of actions and try-out different actions not previously selected. Notice how this idea develops nicely from the “law of effect” in behavioural psychology, where an Agent strengthens mental bonds on actions that produced a reward. In doing so, the Agent must also try-out previously unselected actions; else, it will fail to discover better actions.

Exploration is when an Agent has to sample actions from a set of actions in order to obtain better rewards. Exploitation, on the other hand, is when an Agent takes advantage of what it already knows in repeating actions that lead to “favourable” long-term rewards. The key challenge that arises in designing reinforcement learning systems is in balancing the trade-off between exploration and exploitation. In a stochastic Environment, actions will have to be sampled sufficiently well to obtain an expected reward estimate. An Agent that pursues exploration or exploitation exclusively is bound to be less than expedient. It becomes worse than pure chance (i.e. a randomized agent).

Multi-armed Bandits

In a multi-armed bandit problem (MAB) (or n-armed bandits), an Agent makes a choice from a set of actions. This choice results in a numeric reward from the Environment based on the selected action. In this specific case, the nature of the Environment is a stationary probability distribution. By stationary, we mean that the probability distribution is constant (or independent) across all states of the Environment. In other words, the probability distribution is unchanged as the state of the Environment changes. The goal of the Agent in a MAB problem is to maximize the rewards received from the Environment over a specified period.

The MAB problem is an extension of the “one-armed bandit” problem, which is represented as a slot machine in a casino. In the MAB setting, instead of a slot machine with one-lever, we have multi-levers. Each lever corresponds to an action the Agent can play. The goal of the Agent is to make plays that maximize its winnings (i.e. rewards) from the machine. The Agent will have to figure out the best levers (exploration) and then concentrate on the levers (exploitation) that will maximize its returns (i.e. the sum of the rewards).

Right: Multi-armed bandits. The slot machine has multiple (n) arms, each returning a numerical reward when played. In a MAB problem, the reinforcement agent must balance exploration and exploitation to maximize returns.

For each action (i.e. lever) on the machine, there is an expected reward. If this expected reward is known to the Agent, then the problem degenerates into a trivial one, which merely involves picking the action with the highest expected reward. But since the expected rewards for the levers are not known, we have to collate estimates to get an idea of the desirability of each action. For this, the Agent will have to explore to get the average of the rewards for each action. After, it can then exploit its knowledge and choose an action with the highest expected rewards (this is also called selecting a greedy action). As we can see, the Agent has to balance exploring and exploiting actions to maximize the overall long-term reward.

Bibliography

- Narendra, K. S., & Thathachar, M. A. (2012). Learning automata: An introduction. Courier Corporation.

- Sutton, R. S., & Barto, A. G. (1998). Reinforcement learning: An introduction. MIT press.